As software engineers, sometimes our job is to come up with a solution to a problem that requires some sort of algorithm. An algorithm, at a high level, is just a set of directions – the recipe to solve a problem. When do we get to a point where we know the “recipe” we have written to solve our problem is “good” enough?

This is where Big O Notation comes in. The Big O Notation is used to describe two things: the space complexity and the time complexity of an algorithm. In this article, we cover time complexity: what it is, how to figure it out, and why knowing the time complexity – the Big O Notation – of an algorithm can improve your approach.

Time Complexity

The Big O Notation for time complexity gives a rough idea of how long it will take an algorithm to execute based on two things: the size of the input it has and the amount of steps it takes to complete. We compare the two to get our runtime. Time complexity measures how efficient an algorithm is when it has an extremely large dataset. We look at the absolute worst-case scenario and call this our Big O Notation.

O(1)

The first Big O measurement we talk about is constant time, or O(1) (oh of one). When we talk about things in constant time, we are talking about declarations or operations of some sort:

//variable declarations

let number = 9;

//evaluating arithmetic expressions

let x = 5 + 7;

if(x < 5) {

//other code here that is evaluated separately

}Pretty much anything evaluated only one time in our algorithm is counted as constant time.

When evaluating overall running time, we typically ignore these statements since they don’t factor into the complexity. Be aware that O(1) expressions exist and why an expression might be O(1) as opposed to any other possible value.

O(n)

Take a look at this example:

const exampleOne = (arr) => {

for(let i = 0; i < arr.length; i++) {

console.log(arr[i].first_name + " " + arr[i].last_name);

}

}

let arr1 = [{

"id": 1,

"first_name": "Mina",

"last_name": "Strain",

"email": "mstrain0@sfgate.com"

}, {

"id": 2,

"first_name": "Reinhold",

"last_name": "Adicot",

"email": "radicot1@sun.com"

}, {

"id": 3,

"first_name": "Tades",

"last_name": "Mundy",

"email": "tmundy2@patch.com"

}, {

"id": 4,

"first_name": "Sawyere",

"last_name": "Ingre",

"email": "singre3@pinterest.com"

}, {

"id": 5,

"first_name": "Amber",

"last_name": "Reddington",

"email": "areddington4@1und1.de"

}];

let arr2 = [{

/*

Go to mockaroo.com and create fake JSON data that has 100 rows. Preview the array and copy/paste it here.

*/

}];

exampleOne(arr1);

//exampleOne(arr2); //uncomment this when ready to testTake a look at the first dataset of the example. What is the length of the array? Take a look again, but this time at the second data set you created by going to mockaroo.com – what is the length of that array?

Now let’s look at the actual function since the length of our input is known. To figure out the Big O of an algorithm, take a look at things block-by-block and eliminate the non-essential blocks of code. Once you have cancelled out what you don’t need to figure out the runtime, you can figure out the math to get the correct answer.

In this example, we have a for loop. That for loop iterates over every item in the array we pass to it. Because the code has to touch every single element in the array to complete its execution, it’s linear time, or O(n).

Final Thoughts on O(n):

Because we describe Big O in terms of worst-case scenario, it doesn’t matter if we have a for loop that’s looped 10 times or 100 times before the loop breaks. The rate of growth in the amount of time as the inputs increase is still linear.

One final example:

Take the same function as above, but add another block of code to it:

const exampleTwo = (arr) => {

for(let i = 0; i < arr.length; i++) {

console.log(arr[i].first_name + " " + arr[i].last_name);

};

for(let i = 0; i < arr.length; i++) {

console.log(arr[i].first_name + " " + " - CareerKarma Student");

}

}What would be the runtime of this function?

Technically, it’s O(2n), because we are looping through two for loops, one after the other.

However, when expressing time complexity in terms of Big O Notation, we look at only the most essential parts. This means the coefficient in 2n – the 2 – is meaningless. It doesn’t matter how many loops we have stacked on top of each other – the Big O will still be O(n) because we are looping through the same array.

One thing to note:

If you are creating an algorithm that is working with two arrays and you have for loops stacked on top of each other that use one or the other array, technically the runtime is not O(n), unless the lengths of the two separate arrays are the same.

When handling different datasets in a function – in this case two arrays of differing lengths – we count that separately. We use another variable to stand for the other array that has a different length.

const exampleThree = (arr1, arr2) => {

for(let i = 0; i < arr1.length; i++) {

console.log(arr[i].first_name + " " + " - CareerKarma Career Coach");

};

for(let i = 0; i < arr2.length; i++) {

console.log(arr[i].first_name + " " + " - CareerKarma Student");

}

}The time complexity of this problem is O(n + m). The n here is one array and its elements; the m is the other array and its elements. Because we are dealing with two different lengths, and we don’t know which one has more elements, it cannot quite be reduced down to O(n).

Basics of Logarithms and Exponents

Before we talk about other possible time complexity values, have a very basic understanding of how exponents and logarithms work.

Many see the words “exponent”, “log” or “logarithm” and get nervous that they will have to do algebra or math they won’t remember from school. This is not the case! In this section, we look at a very high level about what a log is, what an exponent is, and how each compares to the runtime of an O(n) function.

To look at logarithms and how they work, remind ourselves of how exponents work. The syntax for raising something to an exponent is:

xy = z

We commonly read this as “x to the y power equals z”. The variable z is x multiplied by itself y times.

Logarithmic syntax is:

logx z = y

Read this as “log base x of z equals y”. Look how the variables compare to the previous equation. If we take the base, raise it to the result, we get the number we’re trying to take the log of. It’s basically the inverse of what an exponent is.

"Career Karma entered my life when I needed it most and quickly helped me match with a bootcamp. Two months after graduating, I found my dream job that aligned with my values and goals in life!"

Venus, Software Engineer at Rockbot

An important takeaway here is when we deal with exponents, we deal with a result that is a large number. When we deal with logarithms, we deal with a smaller number as the result.

So…how does this connect with Big O Notation? You’ll see in the next few sections!

O(nx)

So far, we have talked about constant time and linear time. O(n2), a version of O(nx) where x is equal to 2, is called quadratic time. This means as the size of the input increases, the number of steps to solve the problem in the worst-case is squared or raised to the x power.

This can happen when we need to nest loops together to compare an i-th value to another value in an array. See if there are duplicates in an array:

const exampleArr = [1, 5, 13, 45, 2, 5, 31, 9, 45];

const exampleFour = (arr) => {

for(let i = 0; i < arr.length; i++) {

for(let j = 0; j < arr.length; j++) {

if(arr[i] === arr[j] && j !== 0) {

return true;

}

}

};

return false;

}The first loop marks our i-th placement in the array. The second loop looks at every other index in the array to see if it matches the i-th index. If none match and it gets to the end of the loop, the i-th pointer moves to the next index.

This means we look at each index twice in our algorithm. This makes, in this example, an array with a length of 9 take at worst-case take 81 (92) steps. For small datasets, this runtime is acceptable. But when we increase the dataset drastically (say to 1,000,000,000 entries), O(nx) runtime doesn’t look so great.

Final thoughts on O(nx)

Always try to create algorithms with a more optimal runtime than O(nx). You are likely to be dealing with a set of data much larger than the array we have here. Next, let’s take a look at the inverse of a polynomial runtime: logarithmic.

O(log n)

Imagine a phone book. If we are to give you a person’s name and you are to look it up, how will you go about doing that?

An algorithm that starts at the beginning of the book and runs through every name until it gets to the name it’s looking for, runs in O(n) runtime – the worst case scenario is that the person you are looking for is the very last name.

What can we do to improve on that? How can we make it better than linear runtime?

We can do an algorithm called binary search. We won’t go over the ins and outs of how to code out binary search, but if you understand how it works through some pseudocode, you can see why it’s a little bit better than O(n).

Pseudocode for Binary Search

Consider this: a phone book as an array of objects where each object has a firstName, lastName and phoneNumber. Here’s a snippet:

const phoneBook = [{

"firstName": "Mina",

"lastName": "Adicot",

"phoneNumber": "123-456-7890"

}, {

"firstName": "Reinhold",

"lastName": "Atherton",

"phoneNumber": "908-765-4321"

},- Since the phone book is already sorted by last name, we can see if the midpoint’s lastName property matches the search term’s last name.

- If not, and the first letter comes after the current midpoint’s last name’s first letter, we do away with the first half.

- If it comes before, take away the second half.

- If it’s equal, look at the next letter and compare the substrings to each other using steps 1-3.

- Keep doing this action until we find the answer. If we don’t find the answer, say so.

This is called binary search. It has a O(log n) runtime because we do away with a section of our input every time until we find the answer.

Final Thoughts on O(log n)

Recall our basic logarithm equation. The result when we take a log of a number is always smaller. If O(n) is linear and O(n2) takes more steps, then O(log n) is slightly better than O(n) because when we take the log of n it’s a smaller number.

O(2n)

O(2n) typically refers to recursive solutions that involve some sort of operation. The Fibonacci sequence is the most popular example of this runtime. This particular example will return the nth number in the Fibonacci sequence:

const exampleFive = (n) => {

if(n === 0)

return 0;

}

else if(n === 1)

return 1;

}

else{

return exampleFive(n - 1) + exampleFive(n - 2);

}

}This solution increases the amount of steps needed to complete the problem at an exponential rate. Avoid this particular runtime at all costs.

O(n log n)

The O(n log n) runtime is very similar to the O(log n) runtime, except that it performs worse than a linear runtime. Essentially, what an O(n log n) runtime algorithm has is some kind of linear function that has a nested logarithmic function. Take this example:

const n = 2000;

const exampleSix = (n) => {

let sum = 0

for (let i = 0; i < n; i++){

let j = 1

while j < n {

j *= 2

sum += 1

}

}

}In this code snippet, we are incrementing a counter starting at 0 and then using a while loop inside that counter to multiply j by two on every pass through – this makes it logarithmic since we are essentially doing large leaps on every iteration by using multiplication.

Since it’s nested we multiply the Big O notation values together instead of add. We add when we have separate blocks of code. O(n) x O(log n) === O(n log n).

O(n!)

To have a runtime of O(n!), the algorithm has to be extremely slow, even on smaller inputs. One of the more famous simple examples of an algorithm with a slow runtime is one finds every permutation in a string.

In this algorithm, as the length of your input increases, the number of returned permutations is the length of input ! (factorial).

permutation('a') // 1 "a"

permutation('ab') // 2 "ab", "ba"

permutation('abc') // 6 "abc", "bca", "cab", "cba", "acb", "bac"

permutation('abcd') // 24 permutation('abcde') // 120

num of permutations is the length of the input factorial.Factorial, if you recall is the nth number multiplied by every number that comes before it until you get to 1.

const factorial = (n) => {

if(n < 2) {

return 1;

} else {

return n * factorial(n - 1);

}

};If we look at a length of 3, for example, we multiple 3 x 2 x 1 === 6. Six is 3!.

It doesn’t take a very long or very large input for an algorithm to take a really long time to complete when the runtime is this slow. At all costs, try to find something more efficient if you can. This is okay for a naive or first-pass solution to a problem, but definitely needs to be refactored to be better somehow.

Basic Rules to Remember

There are some basic things to remember when trying to figure out the time complexity of a function:

- Constants are good to be aware of but don’t necessarily need to be counted. This includes declarations, arithmetic operations, and coefficients or multiples of the same runtime (i.e. if we have two loop stacked on top of each other with same runtime, we don’t count it as O(2n) – it’s just O(n).

- Big O Notation only concerns itself with the upper bound, or worst-case scenario when it comes to time complexity.

- When you have multiple blocks of code with different runtimes stacked on top of each other, keep only the worst-case value and count that as your runtime. It’s the most significant block of code in your function that will have an effect on the overall complexity.

Conclusion

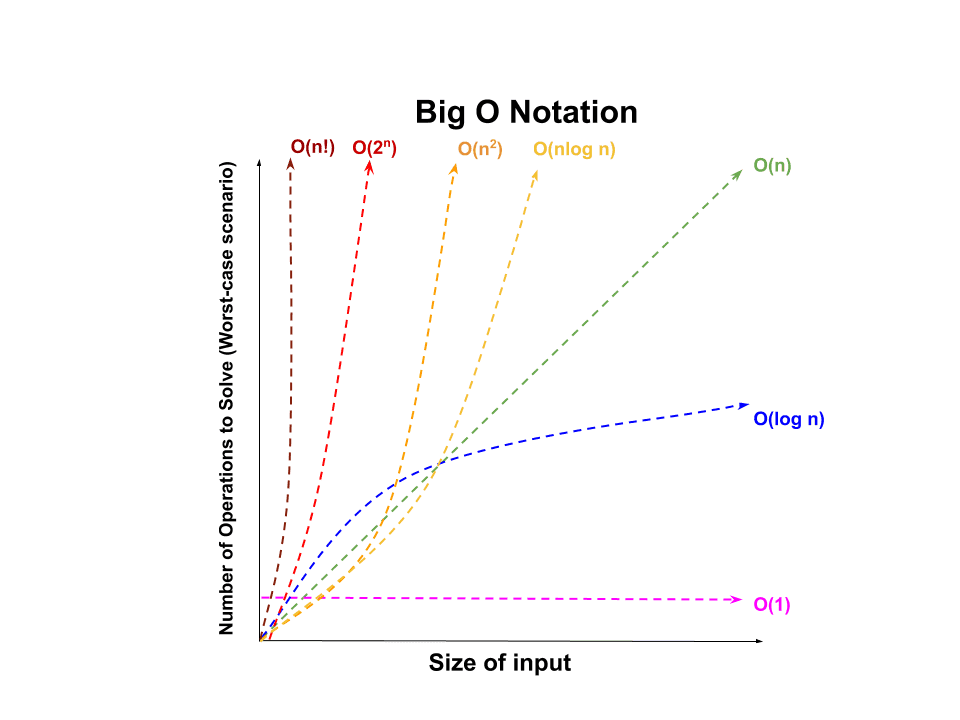

To recap, the Big O Notation can have two meanings associated with it: time complexity and space complexity. In this article we’ve looked closely at time complexity. This is figured as how much time it takes for the algorithm to complete when its input increases. This is important when we interact with very large datasets – which you are likely to do with an employer. Here is a graph that can serve as a cheat sheet until you get to know the Big O Notation better:

Being aware of the Big O Notation, how it’s calculated, and what would be considered an acceptable time complexity for an algorithm will give you an edge over other candidates when you look for a job.

About us: Career Karma is a platform designed to help job seekers find, research, and connect with job training programs to advance their careers. Learn about the CK publication.